Fake B2B AI Tools Are the New Bait—and Businesses Are Falling for It

Let’s be honest—many of us rely on AI tools to get things done faster, smarter, and better. But what if the very tools you trust could be turned against you? It sounds like a tech thriller, but it’s actually happening. And it's getting worse.

A new report from Cisco’s threat intelligence team shows that cybercriminals have found a sneaky new trick: creating fake versions of popular business AI tools to spread ransomware and malware. They’re getting smarter—and bolder.

We dug into what’s really going on, how these attacks work, and why it’s a growing problem for companies depending on AI to run smoothly.

- Hackers are copying well-known AI tools like NovaLeadsAI, ChatGPT, and InVideo. But these copies are fake and dangerous—they’re booby-trapped with ransomware and malware.

- They use SEO tricks (called SEO poisoning) to push their fake sites to the top of Google results. So if you’re not careful, you might click the wrong download link and get hit.

- The CyberLock ransomware demands a ransom of $50,000 in Monero crypto. To make it worse, the attackers lie and say the money helps with humanitarian causes. It's manipulative—and cruel.

- Another one, Lucky_Gh0$t, goes after your files in a terrifying way. It scrambles small ones and completely destroys large ones. There’s no coming back from that.

- Numero malware messes with your Windows interface so badly that your computer becomes useless. You’re left staring at a frozen screen, not knowing what just happened.

How to Protect Yourself

This is a wake-up call for businesses: don’t trust just any download link. Only get tools from official sources—no shortcuts. Also, make sure you're using strong security practices like multi-factor authentication (MFA), updated antivirus, and endpoint protection.
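If you want “official sources only” to be something you can check, one common practice is to compare a downloaded installer’s SHA-256 hash with the checksum the vendor publishes on its genuine site. The Python sketch below is illustrative and not a vendor tool. The function names are hypothetical, and it assumes the vendor actually publishes a SHA-256 value. A checksum copied from a lookalike site proves nothing, so the published hash must come from a page you reached on your own, not through a search ad or a shared link.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks
    so large installers never need to fit in memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path: str, published_hex: str) -> bool:
    """Hypothetical helper: compare a local file against the checksum the
    vendor lists on its official site (surrounding whitespace and letter
    case in the published value are ignored)."""
    return sha256_of(path) == published_hex.strip().lower()
```

A mismatch means the file is not the one the vendor published and should not be run. A match only confirms that much, so this complements endpoint protection rather than replacing it.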

Fake AI tools are more than just a scam—they’re a trap. And in today’s fast-moving tech world, it’s easy to get caught. Stay sharp, stay cautious, and don’t let cybercriminals turn your productivity tools into weapons.

AI Tool Cloning: A Sneaky New Threat We Didn’t See Coming

When AI really took off, many of us started worrying about deepfakes — those fake videos and images that look so real, they’re almost impossible to spot. But while we’ve been focused on figuring out what’s real and what’s not online, cybercriminals seem to be taking a different — and frankly scarier — path.

Instead of faking people’s faces, they’re now faking something many of us trust without question: AI-powered business tools.

Just last month, Cisco Talos raised the alarm about a growing number of fake AI tools pretending to be legit software. These aren’t just poorly made copies — they’re polished, convincing, and designed to trick even the most careful users.

Researchers found that ransomware families such as CyberLock and Lucky_Gh0$t, along with a destructive new malware strain nicknamed “Numero,” are being spread online, all disguised as popular AI tools. They look like helpful downloads, but once you install them, you’re basically handing your system over to hackers.

What’s worse? These fake tools are showing up in search engine results and on platforms like Telegram, thanks to shady tactics such as “SEO poisoning,” which pushes fake links to the top of your search results.

And it’s not random. The malware is posing as trusted tools many businesses already know and use, like NovaLeadsAI, ChatGPT, and InVideo AI. Why? Because hackers know these tools are in demand, and they’re counting on our curiosity — or even just one tired click — to do the rest.
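Because SEO poisoning depends on lookalike domains ranking well, one simple guard is to check a download link’s host against a list of domains your team has verified directly with each vendor, before anyone clicks. In this hedged Python sketch, the entries in `OFFICIAL_DOMAINS` are hypothetical placeholders, not the real NovaLeadsAI, ChatGPT, or InVideo domains, and `is_official_download` is an illustrative name.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: placeholders only. Replace with domains your team
# has confirmed directly with each vendor.
OFFICIAL_DOMAINS = {"vendor-one.example", "vendor-two.example"}


def is_official_download(url: str) -> bool:
    """Accept only HTTPS links whose host is an allowlisted domain or one of
    its subdomains; everything else, including lookalikes, is rejected."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # .hostname drops any "user@" prefix and port, and lowercases the host.
    host = (parts.hostname or "").rstrip(".")
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


if __name__ == "__main__":
    print(is_official_download("https://downloads.vendor-one.example/setup.zip"))  # True
    print(is_official_download("https://vendor-one-ai.example/setup.zip"))         # False: lookalike
    print(is_official_download("https://vendor-one.example@evil.test/setup.zip"))  # False: host is evil.test
```

The matching is deliberately strict: a near-miss spelling is rejected outright rather than scored as “close enough,” which is the behavior you want against typosquatted domains.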

Cybercriminals Use Fake Installers of NovaLeadsAI, ChatGPT, and InVideo to Spread Malware

In a troubling development, hackers have started impersonating popular AI tools that many businesses trust and use daily. By running fake installer campaigns for NovaLeadsAI, ChatGPT, and InVideo, they prey on people’s curiosity and need for productivity, only to infect their systems with dangerous malware.

Fake NovaLeadsAI Installer

Talos researchers found that the operators behind CyberLock ransomware built a fake version of the NovaLeadsAI website, one convincing enough to fool someone in a hurry or unfamiliar with the real site.

What’s scary is what happens next. When users download and run what they think is a helpful AI tool (packaged in a ZIP file), they’re actually launching a hidden ransomware attack. Once the installer runs, it silently encrypts the user’s files and holds them hostage for a ransom. Imagine the panic and helplessness you'd feel watching your work disappear behind a digital lock.

Fake lookalike NovaLeadsAI website with a clickbait button. Source: Cisco Talos

Fake ChatGPT Installer

In another campaign, attackers targeted ChatGPT users with a malicious ZIP file containing an executable named “ChatGPT 4.0 full version – Premium.exe.” It sounds tempting: who wouldn't want the newest version of one of the most powerful AI tools out there?

But instead of getting advanced AI help, victims unknowingly install the Lucky_Gh0$t ransomware. To make the trap more convincing, the ZIP file even includes real Microsoft open-source AI files. It’s a clever trick to avoid detection, but for the user, it’s a nightmare—unexpected, confusing, and deeply frustrating.

Lucky_Gh0$t ransomware fake installation ZIP file contents. Source: Cisco Talos
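The Lucky_Gh0$t archive worked by hiding one executable among legitimate-looking files. A hedged way to catch that pattern is to list an archive’s contents before extracting anything and flag entries with executable or script file types. The Python sketch below uses only the standard `zipfile` module; the extension list is an assumption and deliberately non-exhaustive, and `risky_members` is a hypothetical helper name.

```python
import zipfile
from pathlib import PurePosixPath
from typing import List

# Assumed, non-exhaustive list of file types that can execute code on Windows.
RISKY_EXTENSIONS = {
    ".exe", ".scr", ".com", ".bat", ".cmd", ".ps1",
    ".vbs", ".js", ".msi", ".lnk", ".dll",
}


def risky_members(zip_path: str) -> List[str]:
    """Hypothetical helper: return archive entries with executable or script
    extensions. Only the ZIP's directory is read; nothing is extracted."""
    flagged = []
    with zipfile.ZipFile(zip_path) as archive:
        for name in archive.namelist():
            # ZIP entry names use "/" separators, so a POSIX path parses them.
            if PurePosixPath(name).suffix.lower() in RISKY_EXTENSIONS:
                flagged.append(name)
    return flagged
```

An empty result does not prove an archive is safe, since malicious code can hide in other file types. But a supposed AI tool that arrives as a bare `.exe` inside a ZIP is worth a second look before anyone double-clicks it.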

Fake InVideo AI Installer

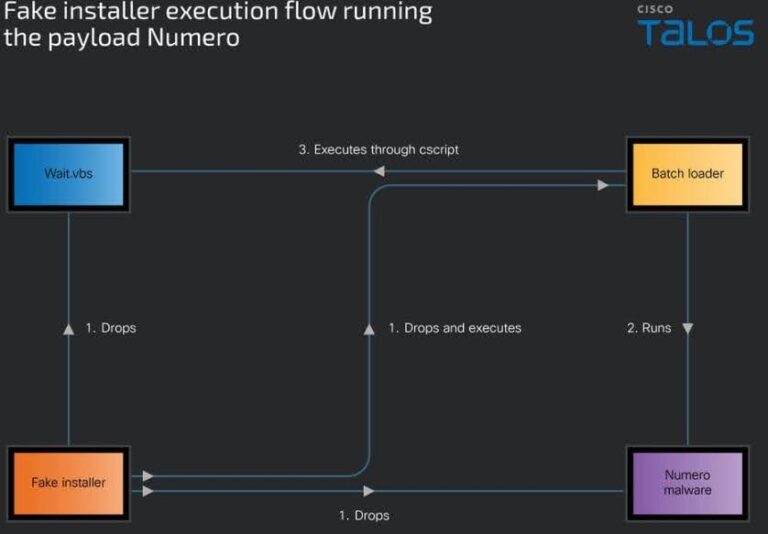

The third campaign focuses on InVideo, a popular AI video tool. Talos found that cybercriminals are using a new type of malware that the researchers have dubbed “Numero.” The fake installer contains a mix of harmful scripts and files designed to quietly attack the victim’s computer.

Once installed, the malware tampers with the Windows interface itself. Victims often find their screens distorted, apps misbehaving, and systems barely usable. It’s not just an inconvenience—it’s a complete disruption of your digital life.

Fake installer execution flow running Numero payload. Source: Cisco Talos

How Organizations Are Affected

For many ransomware groups, it all comes down to one thing: money. These cybercriminals are constantly looking for their next payday—and more often than not, they find it in small and medium-sized businesses. Why? Because these companies are diving headfirst into the world of AI, often without fully understanding the risks.

Researchers at Cisco Talos have found that a lot of these businesses, eager to adopt AI tools and stay ahead of the competition, are unknowingly walking into traps. Hackers are disguising malicious software as helpful AI tools, and many unsuspecting companies are falling for it.

Take CyberLock ransomware, for example. It tricked people by offering what seemed like a generous gift: a free one-year subscription to a tool called NovaLeadsAI. But that “free” gift came with a hidden price. Instead of gaining access to a powerful AI tool, users who installed the program unknowingly unleashed ransomware on their systems.

Then came the emotional twist. Once the damage was done, the attackers demanded a hefty ransom of $50,000, payable only in Monero cryptocurrency. To make things worse, they tried to manipulate their victims by claiming the money would support humanitarian causes in places like Palestine, Ukraine, Africa, and Asia. The ransom note also claimed that sensitive data had been stolen, but Cisco Talos investigators couldn’t find any sign that the malware actually stole data or sent it anywhere. It was all part of the psychological game.

Even worse, not all of this malware takes the traditional approach of locking files. Some strains are more insidious. The Numero malware, for instance, doesn’t encrypt anything. Instead, it destroys the Windows interface entirely, leaving users helpless and locked out of their own systems. It’s a different kind of chaos: quiet, brutal, and incredibly disruptive.

These attacks don’t just hurt a company’s bottom line. They leave people feeling violated, blindsided, and overwhelmed. When you're just trying to grow your business and build something meaningful, falling victim to one of these schemes can feel like the rug has been pulled out from under you.

How Businesses Can Spot & Stay Safe from Fake AI Tools

Let’s face it — the buzz around AI tools is huge right now, and cybercriminals know it. They’re using this hype to trick businesses into downloading fake AI tools loaded with harmful software. So how can you protect your company?

The first and most important step is to download software only from trusted, official sources. But in today’s digital world, that alone isn’t enough. According to experts at Cisco Talos, here are some other smart moves to keep your business safe:

1. Use Endpoint Protection Software

Even the most careful person can be fooled. An endpoint protection tool acts like a safety net — it helps spot and stop harmful software, even if someone accidentally downloads a fake AI app.

2. Defend Your Email

A lot of threats come through email — one wrong click, and malware slips in. Email threat defense tools can block those sketchy messages before they even reach your inbox.

3. Turn on Your Firewall

Think of a firewall as a digital security guard. It inspects network traffic, blocking suspicious connections and known-malicious downloads before damage is done.

4. Use a Secure Internet Gateway

Whether your team is working from the office or at home, a secure internet gateway keeps everyone from landing on dangerous websites or connecting to harmful servers.

5. Follow the "Least Privilege" Rule

Give employees access only to what they truly need. This limits the risk if someone’s account ever gets compromised — it's like locking doors inside a house to keep valuables safer.

6. Enable Multi-Factor Authentication (MFA)

Passwords alone just don’t cut it anymore. MFA adds an extra step to logging in, making it way harder for attackers to break in — even if they steal a password.
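To see why a stolen password alone isn’t enough, it helps to look at how the six-digit codes in many authenticator apps are produced. Many apps implement the time-based one-time password (TOTP) scheme from RFC 6238: the app and the server share a secret, and each derives a short code from that secret and the current 30-second time window. An attacker who has only the password cannot compute the code. This Python sketch shows the derivation with HMAC-SHA1, the common default. It is for illustration only; production systems should rely on a vetted MFA product or library.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP with HMAC-SHA1: derive a short code from a shared
    base32 secret and the current time window."""
    # Base32 secrets are often shown without '=' padding; restore it.
    key = base64.b32decode(secret_b32.upper() + "=" * (-len(secret_b32) % 8))
    now = time.time() if for_time is None else for_time
    counter = int(now // step)  # whole time steps since the Unix epoch
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): low 4 bits of the last byte pick an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # RFC 6238 test secret: the ASCII bytes "12345678901234567890".
    secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(secret, for_time=59, digits=8))  # 94287082
```

The value printed for time 59 matches one of the SHA-1 test vectors published in RFC 6238, which is a quick way to sanity-check any TOTP implementation.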

The Bottom Line

The truth is, cybercriminals move fast, and right now the excitement around AI tools is the perfect cover for their attacks. What makes it scarier? The names of tools we trust are now being used as bait.

Businesses need to stay sharp. It’s time to rethink how we approach digital safety. This means more than just using antivirus — it means training your team to recognize risks, double-checking every download, and building a strong, layered defense system.

At the end of the day, it’s better to be cautious than sorry. If a new AI tool looks too good to be true, it probably is.

- Cybercriminals camouflaging threats as AI tool installers (blog.talosintelligence.com)
