Exploring the Frontier of Generative AI: A Practical Guide to Rapid Innovation

Generative AI has evolved rapidly in recent years and is opening up new ways to solve user problems. Because it is still relatively new in practical applications, getting a project with large language models (LLMs) off the ground can be difficult. We're excited to share how we use generative AI to solve problems and to launch features faster.

Our strategy for building with LLMs follows three stages. We begin with product ideation and requirement definition, clarifying our objectives and how users will benefit. Next, we prototype, iteratively refining the concept through small-scale experiments. Finally, we deploy the product at full scale. In this post, we walk through each stage and share practical guidance.

How we identify use cases for generative AI

At the heart of our approach lies a deep consideration for both our users and our team members. We begin by asking: how can generative AI unlock new opportunities to address critical needs? Like all forms of machine learning, generative AI is a versatile tool that should be applied judiciously, only when it offers distinct advantages over other methods.

To pinpoint where generative AI can truly shine, we focus on challenges characterized by:

- The need to analyze, interpret, or review large volumes of unstructured content, such as text.

- A need to scale that would otherwise be impractical because of resource limitations.

- Situations where conventional rules-based or traditional machine learning approaches fall short.

Focusing on these scenarios lets us apply generative AI where its capabilities give it a clear edge over other approaches.

Defining Product Requirements

After identifying a potential use case for a generative AI application, the next step is to define the product requirements. This phase involves selecting the most appropriate LLM and framing our problem effectively as a prompt for it.

Key aspects we evaluate include:

- Latency: How quickly must the system respond to user input?

- Task Complexity: What level of comprehension is needed from the LLM? Is the input context highly specific to a particular domain?

- Prompt Length: How much context is necessary to provide for the LLM to perform its task effectively?

- Quality: What level of accuracy is deemed acceptable for the generated content?

- Safety: How critical is it to sanitize user input or prevent the generation of harmful content and prompt manipulation?

- Language Support: Which languages must the application be able to handle?

- Estimated QPS (Queries Per Second): What throughput does our system ultimately need to support?

These factors often involve trade-offs, especially between task complexity, prompt length, and quality on one side and low latency on the other. Larger, more capable LLMs generally produce better results but are slower at inference because of their size. If response time is critical, we can either invest in more compute or accept lower quality by using a smaller model. The right balance depends on each project's specific requirements and constraints.
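To make the latency and QPS trade-off concrete, the sketch below applies Little's law (requests in flight = arrival rate times the time each request spends in the system) to estimate how many inference replicas a target load needs. The helper name, the assumption that each replica serves a fixed number of concurrent requests without slowing down, and all numbers are illustrative assumptions, not measurements.

```python
import math


def required_replicas(
    peak_qps: float,
    latency_s: float,
    concurrency_per_replica: int,
) -> int:
    """Estimate inference replicas needed for a target load (hypothetical helper).

    Little's law: requests in flight = arrival rate (QPS) * time in system (s).
    Assumes each replica can serve `concurrency_per_replica` requests at once
    without its latency degrading, which real servers only approximate.
    """
    if peak_qps < 0 or latency_s <= 0 or concurrency_per_replica <= 0:
        raise ValueError("latency and concurrency must be positive; QPS non-negative")
    in_flight = peak_qps * latency_s
    # Always keep at least one replica running.
    return max(1, math.ceil(in_flight / concurrency_per_replica))


# Illustrative numbers only: 50 QPS, 16 concurrent requests per replica.
print(required_replicas(50, 2.0, 16))  # larger model, 2 s/request -> 7
print(required_replicas(50, 1.0, 16))  # smaller model, 1 s/request -> 4
```

Halving per-request latency, for example by switching to a smaller model, halves the number of requests in flight and so roughly halves the hardware needed, which is the compute-versus-quality trade-off in concrete terms.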

From Concept to MVP: Prototyping AI Applications

Navigating AI Prototype Development: Selecting, Evaluating, and Launching

Our product requirements guide our selection of off-the-shelf LLMs for prototyping. We often start with advanced commercial LLMs to validate ideas quickly and gather early user feedback. These models are expensive, but the reasoning is simple: if a state-of-the-art foundation model such as GPT-4 cannot adequately solve the problem, current generative AI technology probably cannot either. Using existing LLMs also lets us focus on iterating on the product rather than building and maintaining ML infrastructure.

Evaluating Prompts

A crucial step is crafting effective prompts. We start with basic instructions for our chosen LLM (e.g., ChatGPT) and refine the wording to clarify the task. However, it can be hard to tell whether a change actually improves a prompt, so evaluation is key. We combine AI-assisted evaluation with performance metrics to identify which prompts yield higher-quality outputs. In an approach akin to actor-critic reinforcement learning, GPT-4 acts as the critic, automatically grading outputs against predefined criteria, which speeds up prompt refinement.
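The AI-assisted evaluation described above can be sketched as a small harness: generate outputs for each candidate prompt over a set of examples, have a critic score each output against each criterion, and keep the prompt with the highest average. This is a minimal sketch, not our production tooling; `generate` and `judge` are hypothetical callables that in practice would wrap calls to the prototyping LLM and to a critic model such as GPT-4, and the toy stand-ins exist only so the example runs.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple

# Hypothetical interfaces: in practice each would wrap an LLM API call.
Generate = Callable[[str, str], str]  # (prompt, example) -> model output
Judge = Callable[[str, str], float]   # (output, criterion) -> score, e.g. 1-5


def score_prompt(prompt: str, examples: Sequence[str], criteria: Sequence[str],
                 generate: Generate, judge: Judge) -> float:
    """Average critic score for one prompt across all examples and criteria."""
    return mean([
        judge(generate(prompt, example), criterion)
        for example in examples
        for criterion in criteria
    ])


def best_prompt(prompts: Sequence[str], examples: Sequence[str],
                criteria: Sequence[str], generate: Generate,
                judge: Judge) -> Tuple[str, float]:
    """Return the highest-scoring prompt and its average score."""
    scores = {p: score_prompt(p, examples, criteria, generate, judge) for p in prompts}
    winner = max(scores, key=scores.get)
    return winner, scores[winner]


# Toy stand-ins so the sketch runs without an API key.
def fake_generate(prompt: str, example: str) -> str:
    return f"{prompt}: {example}"


def fake_judge(output: str, criterion: str) -> float:
    # A real critic would be an LLM prompted with the criterion; this toy
    # rule simply rewards outputs produced by the more specific prompt.
    return 5.0 if "one concise sentence" in output else 2.0


candidates = ["Summarize the review", "Summarize the review in one concise sentence"]
print(best_prompt(candidates, ["review A", "review B"], ["accuracy", "brevity"],
                  fake_generate, fake_judge))
# ('Summarize the review in one concise sentence', 5.0)
```

Keeping the generator and the critic as separate callables makes it easy to swap in a different critic model or to add human-labeled criteria later without touching the scoring loop.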

Launch and Learn

Once we are confident in the prompt's quality, we roll the feature out as a limited release (e.g., an A/B test) to observe how it performs in practice. The metrics depend on the application, but we focus on user behavior and satisfaction, which lets us improve quickly. For internal tools, efficiency and sentiment metrics guide enhancements; for consumer-facing apps, user feedback and engagement take priority.
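A limited release needs a stable way to decide which users see the new feature. One common technique, sketched here under our own assumptions rather than as a description of any particular experimentation platform, is to hash the user ID together with an experiment name so that each user always lands in the same bucket. The function and experiment names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_percent: float) -> str:
    """Deterministically assign a user to 'treatment' or 'control'.

    Hashing the experiment name with the user ID keeps each user's assignment
    stable across requests, while different experiments get independent splits.
    """
    if not 0 <= treatment_percent <= 100:
        raise ValueError("treatment_percent must be between 0 and 100")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in 0..9999
    return "treatment" if bucket < treatment_percent * 100 else "control"


# Roll the hypothetical "llm-summary" experiment out to 5% of users.
print(assign_variant("user-123", "llm-summary", 5.0))
```

Because the assignment is a pure function of its inputs, it needs no stored state, and widening the rollout (say, from 5% to 20%) keeps every user who was already in treatment there.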

We also monitor system health metrics such as latency, throughput, and error rates. In addition, LLM outputs are not always well structured, so we check how reliably they can be parsed and how consistent their format is; this tells us what post-processing a scaled deployment will need.
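Checking format consistency can be as simple as trying to parse every response and tracking how often parsing fails. The sketch below assumes, purely for illustration, that the prompt asks the model for a JSON object with `label` and `confidence` keys; the schema and helper names are hypothetical, and `str.removeprefix` requires Python 3.9 or later. It tolerates one common deviation, a Markdown code fence around the JSON, and rejects everything else.

```python
import json
from typing import Optional, Sequence

REQUIRED_KEYS = {"label", "confidence"}  # hypothetical output schema
FENCE = "`" * 3  # Markdown code fence that models sometimes wrap JSON in


def parse_llm_output(raw: str) -> Optional[dict]:
    """Return the parsed JSON object, or None if the response is malformed."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the surrounding fence and an optional "json" language tag.
        text = text.strip("`").strip()
        text = text.removeprefix("json").strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return None
    return data


def parse_failure_rate(responses: Sequence[str]) -> float:
    """Fraction of responses that could not be parsed into the expected schema."""
    if not responses:
        return 0.0
    failures = sum(parse_llm_output(r) is None for r in responses)
    return failures / len(responses)


samples = [
    '{"label": "spam", "confidence": 0.92}',
    FENCE + 'json\n{"label": "ham", "confidence": 0.71}\n' + FENCE,
    "Sure! The message looks like spam.",
    '{"label": "spam"}',
]
print(parse_failure_rate(samples))  # 0.5
```

Tracking this rate per prompt version during the limited release shows whether a lenient parser is enough or whether the prompt (or a structured-output feature of the serving stack) needs to change before scaling up.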

Cost is another major consideration. Measuring token usage during the initial release lets us estimate what the feature will cost at scale, which is vital for planning.
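Projecting cost from pilot usage is simple arithmetic: average the input and output token counts observed during the limited release, price them, and multiply by the expected request volume. The sketch assumes pricing quoted per 1,000 input and output tokens; the rates and volumes are made-up placeholders, not any provider's actual prices.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Pricing:
    usd_per_1k_input: float   # placeholder rate, not a real price list
    usd_per_1k_output: float  # placeholder rate, not a real price list


def projected_monthly_cost(pilot_usage: Sequence[Tuple[int, int]],
                           daily_requests_at_scale: int,
                           pricing: Pricing,
                           days: int = 30) -> float:
    """Project monthly spend from (input_tokens, output_tokens) pairs logged in a pilot."""
    if not pilot_usage:
        raise ValueError("need at least one logged request")
    n = len(pilot_usage)
    avg_in = sum(i for i, _ in pilot_usage) / n
    avg_out = sum(o for _, o in pilot_usage) / n
    per_request = ((avg_in / 1000) * pricing.usd_per_1k_input
                   + (avg_out / 1000) * pricing.usd_per_1k_output)
    return per_request * daily_requests_at_scale * days


usage = [(900, 150), (1100, 250)]  # averages: 1,000 input and 200 output tokens
cost = projected_monthly_cost(usage, 100_000, Pricing(0.01, 0.03))
print(f"${cost:,.0f} per month")  # $48,000 per month
```

Because cost scales linearly with average prompt length, trimming context in the prompt is often the cheapest lever once the feature works.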

Together, these signals tell us whether the product meets expectations and adds value. If it succeeds, it is ready for full-scale deployment; if not, we iterate on it and reevaluate.

Deploying at Scale

LLM Application Architecture

Building Apps with LLMs: Architectural Essentials for Quality and Safety

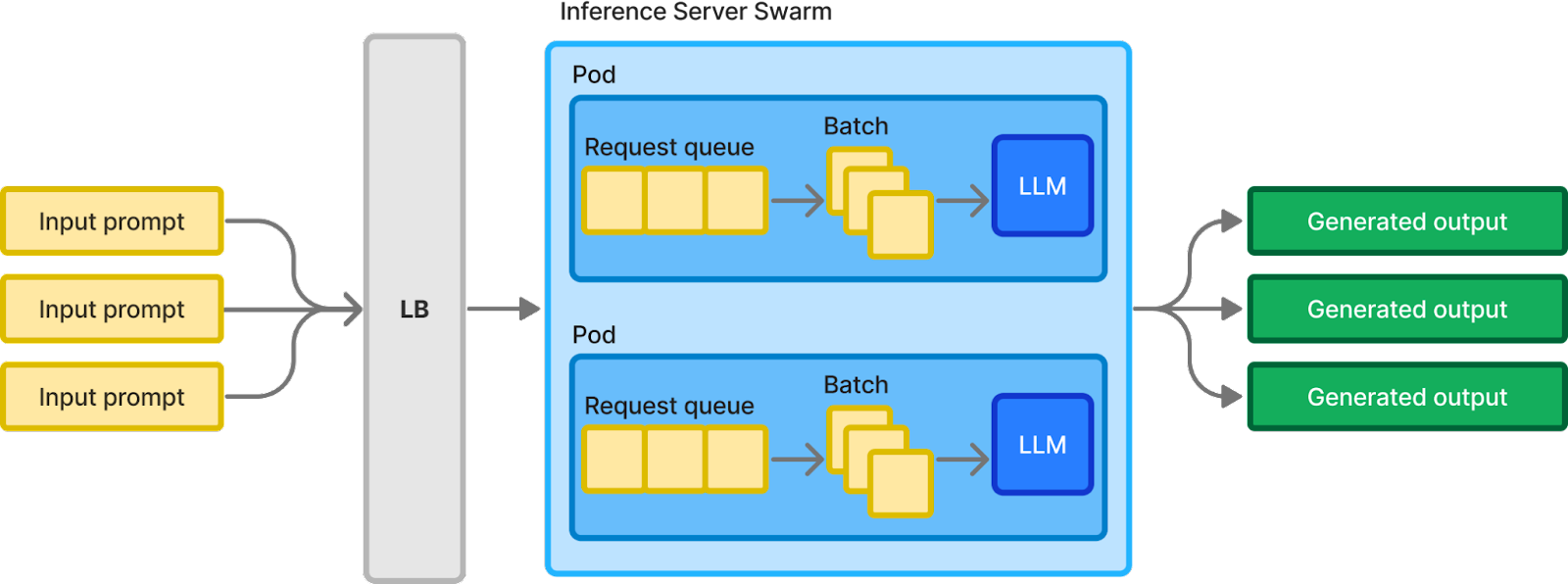

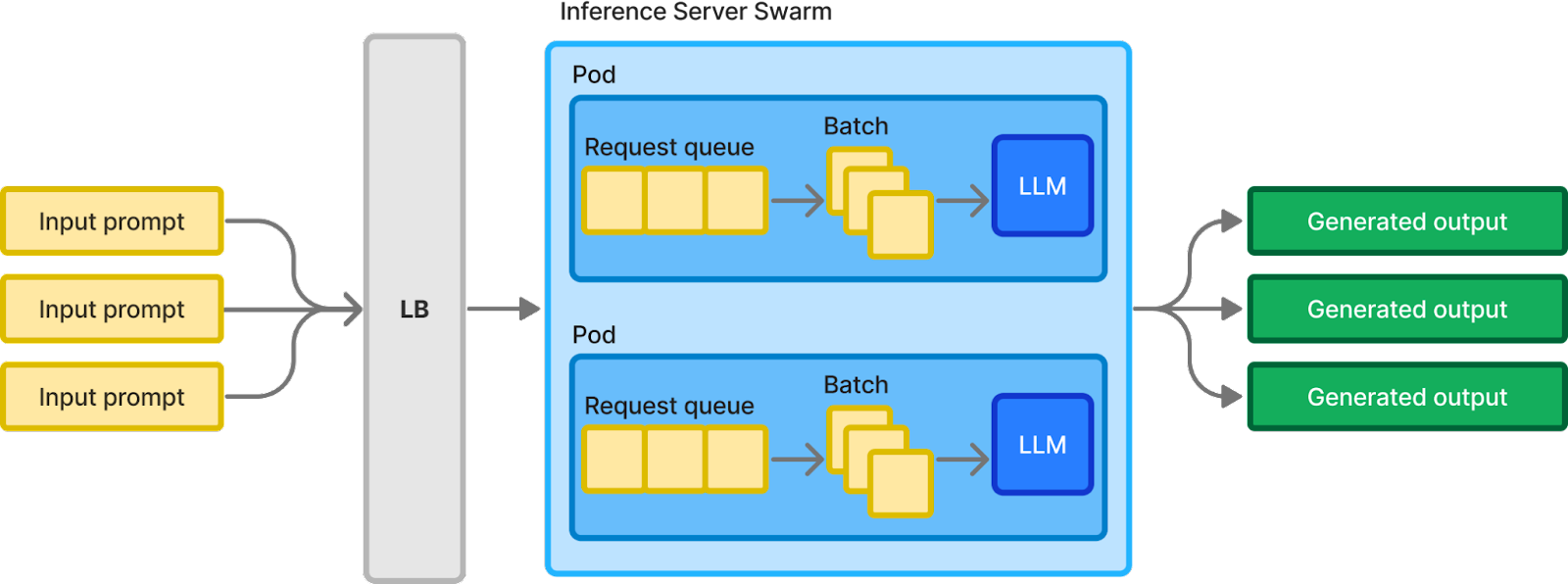

An application built on LLMs has several components that must work together. User inputs are combined with prompts that have been rigorously tested and evaluated across diverse scenarios. Central to this architecture is the LLM inference server, which runs the model and generates responses. Commercial offerings such as ChatGPT and the other OpenAI GPT APIs provide this capability as a hosted service with low latency.

To prioritize user experience, privacy, and safety, we work with cross-functional partners, including Legal and Safety teams, to implement mitigations aligned with privacy principles such as data minimization. For instance, we apply content safety filters to the inference server's output so that undesired material does not reach users. In-house or third-party trust and safety ML models help us detect and handle inappropriate content.
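The output filtering described above amounts to a thin wrapper around the model call. In this minimal sketch, `generate` and `classify` are hypothetical stand-ins: `classify` is assumed to return the probability that a piece of text is unsafe, as an in-house or third-party trust and safety model might. As an additional assumption, the wrapper also screens the user's input before calling the model; the threshold and fallback message are illustrative.

```python
from typing import Callable

FALLBACK_MESSAGE = "Sorry, I can't help with that request."  # illustrative


def safe_generate(user_input: str,
                  generate: Callable[[str], str],
                  classify: Callable[[str], float],
                  threshold: float = 0.5) -> str:
    """Call the LLM only for acceptable input, and block unsafe output."""
    # Screen the input first so clearly harmful requests never reach the model.
    if classify(user_input) >= threshold:
        return FALLBACK_MESSAGE
    response = generate(user_input)
    # Filter the model's output before it is shown to the user.
    if classify(response) >= threshold:
        return FALLBACK_MESSAGE
    return response


# Toy stand-ins for the model and the safety classifier.
def echo_model(prompt: str) -> str:
    return f"You said: {prompt}"


def keyword_classifier(text: str) -> float:
    return 0.9 if "forbidden" in text.lower() else 0.1


print(safe_generate("hello", echo_model, keyword_classifier))  # You said: hello
print(safe_generate("forbidden topic", echo_model, keyword_classifier))
# Sorry, I can't help with that request.
```

Placing the check in one wrapper means every caller gets the same policy, and the classifier can be swapped for a stronger model without changing application code.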

Together, these components let us use LLM capabilities efficiently while maintaining high standards for content quality and safety, in line with our commitment to user satisfaction and responsible AI deployment.

Self-hosted LLMs

Exploring Self-Hosted LLMs: Balancing Costs and Control

When building LLM-powered features, designing the inference server involves trade-offs, chiefly between cost and engineering effort. Commercial LLMs provide access to top-tier models with no setup, but expenses can grow quickly with usage, and for privacy reasons it may be preferable to process data entirely in-house. Self-hosting an open-source or custom fine-tuned LLM can reduce costs significantly, but it requires additional development time, adds maintenance overhead, and may affect performance. These trade-offs need careful consideration.
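A first-pass way to weigh a commercial API against self-hosting is a break-even calculation: divide the fixed monthly cost of running your own GPUs (plus the engineering time to maintain them) by the API cost per request. This sketch deliberately ignores real-world factors such as GPU capacity limits, autoscaling, and quality differences between models; all numbers are hypothetical.

```python
def break_even_requests(api_cost_per_request: float,
                        gpu_count: int,
                        usd_per_gpu_hour: float,
                        monthly_engineering_usd: float,
                        hours_per_month: float = 730.0) -> float:
    """Monthly request volume above which self-hosting is cheaper than an API.

    Assumes self-hosting cost is fixed (GPUs reserved around the clock) and
    the API bills a constant amount per request. 730 h is an average month.
    """
    if api_cost_per_request <= 0:
        raise ValueError("api_cost_per_request must be positive")
    fixed_monthly = (gpu_count * usd_per_gpu_hour * hours_per_month
                     + monthly_engineering_usd)
    return fixed_monthly / api_cost_per_request


# Hypothetical: $0.016 per API request vs. 2 GPUs at $2/h plus $10,000/month of upkeep.
volume = break_even_requests(0.016, 2, 2.0, 10_000)
print(f"{volume:,.0f} requests/month")  # 807,500 requests/month
```

Below the break-even volume, the API is cheaper and needs no infrastructure work; above it, reserved hardware amortizes better, provided it can actually serve that load.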

Recent open-source models such as Llama and Mistral deliver impressive results out of the box, even on intricate tasks that traditionally required training a tailored model. Still, domain-specific or complex tasks may require fine-tuning to reach the desired performance. We have found it effective to start with smaller models and scale up only when quality demands it.
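The "start small, scale up" strategy can be expressed as a loop over candidate models ordered by size that stops at the first one meeting a quality bar on an evaluation set. The model names, sizes, scores, and `evaluate` function below are hypothetical placeholders; in practice `evaluate` might run an offline benchmark or the AI-assisted evaluation used during prototyping.

```python
from typing import Callable, Optional, Sequence, Tuple


def smallest_adequate_model(
    candidates: Sequence[Tuple[str, float]],
    evaluate: Callable[[str], float],
    min_quality: float,
) -> Optional[Tuple[str, float]]:
    """Return (name, score) of the smallest model that meets `min_quality`.

    `candidates` holds (model_name, size_in_billions_of_parameters) pairs.
    Larger models are only evaluated if every smaller one falls short.
    """
    for name, _size in sorted(candidates, key=lambda c: c[1]):
        score = evaluate(name)
        if score >= min_quality:
            return name, score
    return None  # nothing is good enough: consider fine-tuning or a commercial model


# Hypothetical candidates and evaluation scores.
scores = {"model-7b": 0.72, "model-13b": 0.83, "model-70b": 0.91}
candidates = [("model-70b", 70), ("model-7b", 7), ("model-13b", 13)]
print(smallest_adequate_model(candidates, scores.__getitem__, 0.80))  # ('model-13b', 0.83)
```

Evaluating lazily from small to large keeps evaluation cost down and biases the choice toward the cheaper, faster model whenever it is good enough.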

Setting up the required machine learning infrastructure is another challenge. We need a dedicated model server for inference (using frameworks such as Triton or vLLM), GPUs powerful enough to meet performance targets, and a configurable server so we can maximize throughput and minimize latency. The best inference server configuration is task-specific: it depends on the model, the input and output token lengths, and how efficiently requests can be batched. Tuning these settings lets us match the infrastructure to each project's requirements while controlling costs and maintaining performance.
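Because the best configuration depends on the model and on token lengths, it is usually found empirically. The sketch below times a hypothetical `run_batch` function (which would send a batch of prompts to the inference server) at several batch sizes and picks the size with the highest throughput whose latency stays within a budget. Servers that batch requests continuously, such as vLLM, expose related limits rather than a single fixed batch size, so treat this as an illustration of the measurement loop, not a tuning recipe for any specific server.

```python
import statistics
import time
from typing import Callable, Dict, List, Optional, Sequence, Tuple


def sweep_batch_sizes(
    run_batch: Callable[[List[str]], object],
    prompts: Sequence[str],
    batch_sizes: Sequence[int],
    latency_budget_s: float,
    repeats: int = 3,
) -> Tuple[Optional[int], Dict[int, Tuple[float, float]]]:
    """Time `run_batch` at each batch size; return the best size within budget.

    Returns (best_size, results), where results maps each batch size to
    (median latency in seconds, throughput in prompts per second).
    best_size is None if no batch size meets the latency budget.
    """
    results: Dict[int, Tuple[float, float]] = {}
    for size in batch_sizes:
        batch = list(prompts[:size])
        timings = []
        for _ in range(repeats):
            start = time.perf_counter()
            run_batch(batch)
            timings.append(time.perf_counter() - start)
        latency = statistics.median(timings)
        results[size] = (latency, len(batch) / latency)
    within_budget = [s for s, (lat, _) in results.items() if lat <= latency_budget_s]
    best = max(within_budget, key=lambda s: results[s][1]) if within_budget else None
    return best, results


def fake_server(batch: List[str]) -> None:
    # Stand-in for a real inference call: 20 ms overhead plus 5 ms per prompt.
    time.sleep(0.020 + 0.005 * len(batch))


best, results = sweep_batch_sizes(fake_server, ["prompt"] * 16, [1, 4, 16],
                                  latency_budget_s=0.06, repeats=1)
for size, (lat, tput) in results.items():
    print(f"batch={size:>2}  latency={lat * 1000:.0f} ms  throughput={tput:.0f}/s")
print("best batch size:", best)
```

With this stand-in server, batch size 16 takes about 100 ms and exceeds the 60 ms budget, so the sweep should settle on 4, which has higher throughput than 1. Larger batches raise throughput but also latency, which is exactly the tension the budget resolves.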

Final Reflections

Looking ahead, we expect generative AI to play an increasingly important role in tackling large-scale, business-critical challenges. Balancing cost, engineering effort, and performance will remain an ongoing challenge. We look forward to, and are actively contributing to, the rapid evolution of the technology and tools that make this balance easier to strike, paving the way for more effective problem-solving in the years ahead!